[SICP 1] History of Scheme

TL;DR in order to understand the concepts explained in "Structure and Interpretation of Computer Programs" (SICP) better I did some dive into history of Scheme programming language, and learned about former programming languages Lisp, Algol from 50s and the concepts they introduced to computer science such as recursion, garbage collection, lexical scoping and some other programming paradigms that Scheme uses such as actor model, lambda calculus, continuations, closures etc.

Introduction

I’m reading SICP as part of an impossible attempt to narrow down my knowledge gap on essential computer science (CS) topics about which I didn’t get a proper education. Instead of CS I studied physics at the college. After a career change, now I'm writing programs professionally and being liberally called a “software engineer” without any license. Hope text books will come to my rescue!

SICP is an introductory CS course and an accompanying text book from MIT’s EE&CS department from 1985. It teaches how to write programs using Scheme programming language which was also invented by the authors.

Scheme is a minimalist language with barely enough linguistic structure. Many mechanisms that come for free in other languages (as simple as type systems and classes) as part of their syntax need to be implemented manually by the Scheme programmer. Though any complex system can be developed by building upon well-designed abstractions.

This near intangible level of flexibility and lack of language constructs requires mastery and wisdom on the programmer’s side (or guidance for new comers). But this challenge pays of in some intuition about why certain language constructs were invented in the way they are in the first place. And that's the reason why I'm studying this course.

My introduction to programming started with me reading "The C Programming Language" by Ritchie and Kernighan which was mostly about memory manipulation to solve certain coding problems. And I can say that SICP’s approach is very different. It’s goal it to teach programming in general. Also, C’s design goal is to be a system’s programming language, hence it’s not realistic to expect it to be an introductory language.

Some History of Scheme

I’m also glad that my desire to learn programming properly sent me back in time to 80’s when SICP was delivered. SICP uses Scheme that was invented in 1975. Which stands on the shoulders of Lisp and Algol. Both of which were invented in 50’s and basically were two of first generation of proper programming languages. Before them, people only interacted with computers using machine code.

This presentation “All of this has happened before, and it will all happen again” by Mark Allen [video] is about the history and design goals of Lisp, Algol, Fortran and Cobol. (The title is from Peter Pan, but my generation knows it from Battlestar Galactica.) It’s an amazing talk overall, that explains how the perception of computers changed from glorified calculators to programmable machines with the introduction of these first generation languages that magically turn symbols into machine code!

LISP

Scheme took its syntax from Lisp (stands for LISt Processor). Lisp was invented by John McCarthy in late 59s, again at MIT while working at their Artificial Intelligence group. Published it in article “Recursive Functions of Symbolic Expressions and Their Computation by Machine, Part I” [Typeset version, Earlier Manuscript] Note that, Part 2 was going to demonstrate examples of algebraic computations, but it never came out and later algebraic packages were implemented by others according to the research direction set by McCarthy. Unlike today’s eager fans of Game of Thrones, those days the fans were more patient with authors. [podcast with GRR Martin]

Lisp’s design goals were:

- Symbolic computation: processing tokens/symbols as atomic units, in contrast to a language such as Fortran where the main goal is numeric computation. This feature made Lisp useful for AI studies.

- Using recursion and functional composition as main programming idioms.

- Garbage collection: managing memory automatically, releasing resources that are not needed. (Say we took only the first element in a list via

carand left the rest. Someone needs to remove the rest from the memory.)

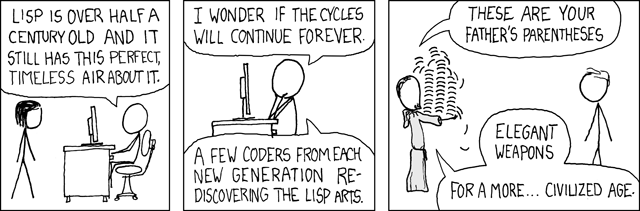

The famous (or infamous, depending on the viewpoint) parentheses, called S-Expressions (symbolic-expressions), were meant to be lower-level intermediate representations that are temporarily exposed to the users while the language is being developed. They emerged from the need of a notation to represent functions as data while Steve Russel was implementing the eval function for IBM 704 machine. He never envisioned or intended for actual LISP programs be written in S-Expressions.

Similarly, the infamous function names for list manipulation, car and cdr, that select the first element of the list and the tail of the list respectively, come from assembly language for this specific machine's “Contents of Address Register” and “Contents of Decrement Register” register names.

However, the more human-friendly version of LISP syntax, M-Expressions (meta-expressions), planned by McCarthy, was never realized. Their original intention was to have a compiler. Though, after learning from Fortran community that their compiler took 30 man-years to produce McCarthy thought that “it didn’t seem like these gradate students would hold still long enough” and went with an interpreted language and S-Expressions stayed with us to this day. I wonder whether there is any LISP successors out there that uses M-Expressions now...

; s-expression function call

(f x y)

; corresponding m-expression function call

f[x;y]

Algol

Scheme took lexical scoping and code blocking ideas from Algol. Similar to recursion and garbage collection from Lisp, these two concepts are so ubiquitous today that it is surprising to hear that they were invented by someone at some point in the history.

Code blocking is the idea of grouping multiple statements together in a block. For example, in “structured programming” the body of a function is a block. Blocks define boundaries in a program code. While entering and existing blocks scopes change.

A scope is a region where names are bounded to entities. Blocks have their own scopes. So, variable name x can mean something in one block and mean something else in another block. This allows program development in collaboration where each module can be developed in its own scope independently without having name clashes in a global scope.

Lexical scoping is a type of static scoping in a language that allows nested blocks. An example of a nested block is a function definition inside a function definition. In lexical scoping the binding of a name is determined by the closest scope it’s in. If outer function declares a parameter x, then x inside of the inner function refers to the x of the outer function (as long as it is not declared in the inner function).

(define (make-add-n n)

(define (add-n x)

; here n is not a parameter of the inner function add-n

; hence its binding comes from the outer function make-add-n

(+ x n))

add-n)

Scheme was the first LISP dialect that implemented lexical scoping.

Algol’s design goal was to be “as close as possible to standard mathematical notation and be readable with little further explanation”. Also following are some of programming concepts Algol introduced the first time

- formally specified grammar (in Backus–Naur form)

- call-by-name lazy evaluation

- primitive boolean data type

Actor Model

Another root of Scheme’s history is in Planner programming language, designed by Carl Hewitt in 1969 (again at MIT). The language itself was invented to do automated planning, which is a branch of AI research about deciding on action sequences to execute strategies by autonomous agents (like control theory but happens in complex and dynamically unknown environments.)

The relationship between Planner and Scheme is not in Planner’s goals but through the mechanism it does concurrent computations called “Actor Model” which was invented by Hewitt in 1972.

An actor is the main unit of a parallel computation similar to an object being the main unit of computation in Object Oriented Programming (OOP) paradigm. An actor has its local state (and probably local computing resources) and receives messages from fellow actors. Upon receiving a message it can update its local state (including behavioral changes about how to react future messages), send messages to other actors and create other actors.

This paradigm helps with common problems that occur in concurrent programming, for examples it removes the need of lock-based synchronization, for reasons beyond my current understanding. First time I learned about this computational model was the Actors chapter of “Exercises in Programming Style” by Cristina Videira Lopes.

Also apparently Twitter has used Scala to implement Actors for their queuing system that handles the processing of tweets sent via their web interface. How Twitter Is Scaling, 2009.

Scheme

Scheme was born by the interest of an MIT graduate student, Steele, who was following the progress of Planner. He and Sussman wanted to study and understand actor model by implementing it in their own toy LISP language with lexical scoping functionality based on lambda calculus.

They called their system Schemer as a reference to Planner. Later the “r” at the end was dropped due to the six-character limit restriction of the file system of their computer. I guess if anyone wants to implement the next LISP dialect they should look up the verbs “plan” and “scheme” in thesaurus and choose a homonym. (There was even another one call Conniver. Dictionary says that "to connive" means to conspire and cooperate secretly.)

After they implemented their interpreter and wrote some actors with it they realized that “the program fragments in apply that implements function application and actor invocation were identical! ... Functions implemented via lambda which return values, and actors implemented via alpha which invoke continuations, and since those two mechanisms had identical structure they concluded that “actors and closures were effectively the same concept”.

Which led them to the conclusion that “all the patterns of control structure that Hewitt had described in terms of actors could equally well be described by the λ-calculus.” and hence “λ-calculus—a small, simple formalism—could serve as the core of a powerful and expressive programming language.” [Quotes from "The First Report on Scheme Revisited", PDF on Webarchive]

(SICP teaches how let, which provides means of lexical scoping, can be implement using lambda. I’ll talk about that later).

“As the MIT school had struggled to find more and more powerful ways to express and manipulate control structure to support heuristic search, we had progressed from Lisp to CONVERT to Planner to Conniver to PLASMA to a simple variation of Lisp!” So, the minimalism of Scheme was not a design goal but an unforeseen outcome of research progress and an inclination towards finding a core principle upon which all programming constructs can be built.

A modern use-case of continuations that I know of is generators. I was introduced them in Python. The idea in Python is that a function can be paused/resumed in its execution using yield keyword. Since functions are first-class, this makes continuations first-class too, I guess. [See Stack Overflow question What does the "yield" keyword do?]

Some use-cases of continuations are exception handling and co-routines. If the language has continuations as objects this allows implementation of these use-cases straight forward. I guess, continuations give the ability to return early for edge cases in Scheme similar to imperative languages. I’m looking forward to read about continuations in Scheme in SICP!

As a final gossip point, apparently, Hewitt did not like putting lambda calculus at the basis of programming. Saying that while λ-calculus can express some sequential and parallel control structures it is not well suited for expressing concurrency in Actor model. On the other hand, actor model is completely capable expressing anything that can be expressed via λ-calculus. Though, AFAI see, that is not a downside per se, because Scheme started to have its own life, independent of the historical conditions that ignited its birth.

I find this story as a great example of unexpected important/useful result of playfully scratching where intellectual curiosity itches. Maybe they didn’t contribute to the actor model paradigm but discovered a minimalist but powerful language.

Later

Steele and Sussmand started publishing their discoveries in a series of articles from 1975 to 1980 called “Lambda Papers” which can be found on Web Archive for readscheme.org. Using those ideas Steele wrote the first Scheme compiler as his master’s thesis.

Scheme that was born in 1975 is still alive after becoming an IEEE and ANSI standard in 1991. (Latest standard is called R7RS).

The smallness and simplicity of Scheme made it useful for teaching and language design experimentation. Sussman and Abelson started an undergraduate computing course and published “Structure and Interpretation of Computer Programs” as its textbook in 1984.

AFAIK, currently Racket is the easiest to access, multi-platform spin-off of Scheme. Their website calls it "world's first ecosystem for language-oriented programming." I’m using Racket to study SICP. It has a #lang sicp package which is not 100% compatible with MIT Scheme, however, for most problems, with some additional helper functions manually written, I was able to produce answers that can run.

In the next post of this series I'm planning to write about SICP and Scheme in general. And then I'll start sharing my understandings from individual chapters.

published at: 2020-08-01 17:02 edited at: 2020-08-06 21:31 UTC-5tags: